Description

In real-world problems, the class balance often differs between the training and test datasets, which may cause an estimation bias. The class balance of the test dataset can be estimated in a semi-supervised setup, using unlabeled data from the test dataset and labeled data from the training dataset. The method provided below performs this estimation by matching the distributions under the L²-distance.
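The matching idea can be illustrated with a minimal, self-contained MATLAB sketch. It is not the estimator implemented in LSDDPriorEstMedian.m: plain histogram density estimates stand in for it, and the toy data, the bin settings, and the variable names (prior_true, cands, prior_hat) are assumptions made for illustration. The test density is modeled as a mixture of the two class-conditional training densities, and the mixing weight that minimizes the squared L²-distance to the test density is taken as the class-prior estimate.

```matlab
% Plug-in illustration of class-prior estimation by L2 distribution matching.
% NOTE: histogram densities are a stand-in here, not the LSDD estimator.
prior_true = 0.7;                          % hypothetical true test class prior
n_te = 2000;                               % number of unlabeled test samples
x1 = randn(1, 1000) - 2;                   % labeled training samples, class 1
x2 = randn(1, 1000) + 2;                   % labeled training samples, class 2
n1 = round(prior_true * n_te);
xte = [randn(1, n1) - 2, randn(1, n_te - n1) + 2];   % unlabeled test samples

% Histogram density estimate on a fixed grid of nb equal-width bins.
lo = -7; hi = 7; nb = 56;
width = (hi - lo) / nb;
bin  = @(x) min(max(floor((x - lo) / width) + 1, 1), nb);   % clamp outliers to end bins
dens = @(x) accumarray(bin(x(:)), 1, [nb 1])' / (numel(x) * width);

p1  = dens(x1);                            % estimate of p(x | y = 1)
p2  = dens(x2);                            % estimate of p(x | y = 2)
pte = dens(xte);                           % estimate of the test input density

% Grid search over candidate priors for the smallest squared L2 distance.
cands = 0:0.01:1;
dist  = zeros(size(cands));
for k = 1:numel(cands)
    q = cands(k) * p1 + (1 - cands(k)) * p2;    % mixture model of the test density
    dist(k) = sum((q - pte).^2) * width;        % Riemann-sum approximation of the integral
end
[~, kbest] = min(dist);
prior_hat = cands(kbest);
fprintf('estimated class prior: %.2f (true: %.2f)\n', prior_hat, prior_true);
```

Estimating each density separately, as done in this sketch, is the naive route. The referenced work on density-difference estimation instead estimates the difference between the densities directly.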

Downloads

A MATLAB implementation and a toy example are given here:

- toyexample.m: a toy example.

- LSDDPriorEstMedian.m: the function that estimates the class prior. The list of hyper-parameters is centered around the median distance of the data.

Example Usage

To estimate the class prior from the labeled training data and the unlabeled test data, the function LSDDPriorEstMedian can be invoked as:

% estimate the class prior

[xi_best, xi_list, LSDE] = LSDDPriorEstMedian(xte, {x1, x2});
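For readers who want to try the call without toyexample.m, the inputs could be prepared roughly as in the sketch below. Only the call itself comes from this page. The toy data and the d-by-n layout (one sample per column) are assumptions, so toyexample.m should be checked for the layout the function actually expects. The reading of xi_best as the estimated class prior is likewise inferred from its name.

```matlab
% Hypothetical toy inputs; the d-by-n (one sample per column) layout is an assumption.
x1  = randn(1, 100) - 2;                        % labeled training samples, class 1
x2  = randn(1, 100) + 2;                        % labeled training samples, class 2
xte = [randn(1, 210) - 2, randn(1, 90) + 2];    % unlabeled test samples (70% from class 1)

if exist('LSDDPriorEstMedian', 'file') == 2     % run only if the download is on the path
    % estimate the class prior
    [xi_best, xi_list, LSDE] = LSDDPriorEstMedian(xte, {x1, x2});
    disp(xi_best);                              % presumably the estimated class prior
else
    disp('LSDDPriorEstMedian.m was not found on the MATLAB path.');
end
```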

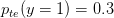

In the figures below, the true class prior is  . The labeled and unlabeled samples were:

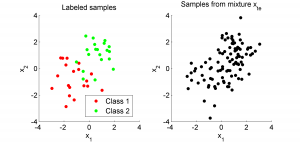

The estimated L²-distance and estimated class prior were:

References

- Sugiyama, M., Suzuki, T., Kanamori, T., du Plessis, M. C., Liu, S., & Takeuchi, I. Density-difference estimation. Neural Computation, vol.25, no.10, pp.2734-2775, 2013.

- Sugiyama, M., Suzuki, T., Kanamori, T., du Plessis, M. C., Liu, S., & Takeuchi, I. Density-difference estimation. In P. Bartlett, F. C. N. Pereira, C. J. C. Burges, L. Bottou, and K. Q. Weinberger (Eds.), Advances in Neural Information Processing Systems 25, pp.692-700, 2012. (Presented at Neural Information Processing Systems (NIPS2012), Lake Tahoe, Nevada, USA, Dec. 3-6, 2012)