Problem formulation

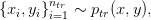

In this problem, we have a fully labeled training dataset:

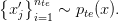

and an unlabeled dataset drawn according to:

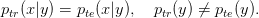

We assume that the test and training datasets differ only by a change in class priors, while the class-conditional densities stay the same.
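The class-prior change assumption can be written compactly as follows. The notation here is an assumption made for illustration and may differ from the symbols used in the equation images: $p$ denotes the training distribution and $p'$ the test distribution.

$$
p'(x \mid y) = p(x \mid y), \qquad p'(y) \neq p(y).
$$

Under this assumption the test input density is a mixture of the training class-conditional densities, $p'(x) = \sum_{y} p'(y)\, p(x \mid y)$, so only the mixing weights $p'(y)$ are unknown.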

The goal is then to get an estimate  , that would allow us to reweight any empirical average

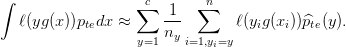

, that would allow us to reweight any empirical average

calculated using the training samples:

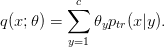
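As a minimal sketch of the reweighting idea, assuming the test class priors have already been estimated: each training sample is weighted by the ratio of the test prior to the training prior of its class. The function name `reweighted_average` and its arguments are hypothetical and are not part of the provided implementations.

```python
import numpy as np


def reweighted_average(values, labels, train_priors, test_priors):
    """Average `values` over training samples, reweighted to the test priors.

    train_priors and test_priors map each class label to its prior
    probability (hypothetical interface, for illustration only).
    """
    values = np.asarray(values, dtype=float)
    labels = np.asarray(labels)
    # Importance weight of a sample: test prior / training prior of its class.
    weights = np.array([test_priors[c] / train_priors[c] for c in labels])
    return float(np.mean(weights * values))


# Toy example: the quantity being averaged is 1 for class 0 and 0 for class 1.
values = [1.0, 1.0, 0.0, 0.0]
labels = [0, 0, 1, 1]
estimate = reweighted_average(values, labels,
                              train_priors={0: 0.5, 1: 0.5},
                              test_priors={0: 0.8, 1: 0.2})
```

In this toy example the plain training average is 0.5, while the reweighted average equals the assumed test prior of class 0.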

The general strategy that we follow is to model the test input density as a mixture of the training class-conditional densities, with the candidate test class priors as mixing weights.
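One standard way to write such a model under the class-prior change assumption is given below. The symbols $q$, $\theta$ and $c$ (the number of classes) are assumptions introduced for illustration and may not match the original notation.

$$
q(x; \theta) = \sum_{y=1}^{c} \theta_y \, p(x \mid y), \qquad \theta_y \ge 0, \quad \sum_{y=1}^{c} \theta_y = 1.
$$

Here $p(x \mid y)$ is the class-conditional density, which is shared by the training and test data, and $\theta_y$ plays the role of the unknown test prior of class $y$.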

We then select the model parameters so that the model matches the test input density. To compare the two, we use a divergence (such as an f-divergence or L2-distance). These can in turn be directly estimated from samples (avoiding density estimation).
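The matching step can be sketched for a binary problem as follows. This is an illustrative sketch, not the provided implementation: it assumes labels 0 and 1, estimates the L2-distance between the mixture model and the test density with a Gaussian kernel least-squares fit of the density difference, and picks the mixing weight by grid search. The function names, the fixed kernel width `sigma` and the regularization `lam` are all assumptions; in practice these hyperparameters would need to be tuned, for example by cross-validation.

```python
import numpy as np


def gaussian_features(x, centers, sigma):
    """Gaussian kernel features of shape (n_samples, n_centers)."""
    sq_dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))


def estimate_prior_l2(x_train, y_train, x_test, sigma=1.0, lam=1e-3,
                      n_centers=100, n_grid=101, seed=0):
    """Estimate the test prior of class 0 (binary labels 0/1 assumed).

    Inputs are 2-D arrays of shape (n_samples, n_features).
    """
    rng = np.random.default_rng(seed)
    n_centers = min(n_centers, len(x_test))
    centers = x_test[rng.choice(len(x_test), size=n_centers, replace=False)]
    dim = x_test.shape[1]

    # H[l, m] is the integral of the product of two Gaussian basis functions.
    sq_dist = ((centers[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    H = (np.pi * sigma ** 2) ** (dim / 2.0) * np.exp(-sq_dist / (4.0 * sigma ** 2))
    reg = H + lam * np.eye(n_centers)

    # Empirical feature means for each training class and for the test set.
    a0 = gaussian_features(x_train[y_train == 0], centers, sigma).mean(axis=0)
    a1 = gaussian_features(x_train[y_train == 1], centers, sigma).mean(axis=0)
    b = gaussian_features(x_test, centers, sigma).mean(axis=0)

    best_theta, best_dist = 0.0, np.inf
    for theta in np.linspace(0.0, 1.0, n_grid):
        # Feature mean of the mixture model minus that of the test data.
        h = theta * a0 + (1.0 - theta) * a1 - b
        alpha = np.linalg.solve(reg, h)  # regularized least-squares fit
        dist = 2.0 * h @ alpha - alpha @ H @ alpha  # L2-distance estimate
        if dist < best_dist:
            best_theta, best_dist = theta, dist
    return float(best_theta)


# Synthetic check: balanced training data, test data with 80% class 0.
rng = np.random.default_rng(0)
x_train = np.concatenate([rng.normal(-2, 1, (200, 1)), rng.normal(2, 1, (200, 1))])
y_train = np.concatenate([np.zeros(200, dtype=int), np.ones(200, dtype=int)])
x_test = np.concatenate([rng.normal(-2, 1, (400, 1)), rng.normal(2, 1, (100, 1))])
theta_hat = estimate_prior_l2(x_train, y_train, x_test)
```

The densities themselves are never estimated: only sample averages of kernel features enter the computation, which is the point of estimating the divergence directly from samples.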

We provide implementations for two methods:

Note that experimentally, the  -method seems to give the best results.
