(work in progress)

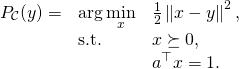

Many convex optimization problems can be solved, fairly simply, if the projection onto the constraints can be quickly and simply calculated. By projection onto the constraints ![]() , we mean the solution to the following optimization problem:

, we mean the solution to the following optimization problem:

(1) ![]()

As an example, the subgradient descent method can incorporate the projection operator to deal with constraints. Here we focus on projection on a simplex: ![]() . The objective function that we wish to solve is:

. The objective function that we wish to solve is:

(2)

As a test case, we can implement the projection in cvxpy:

import cvxpy as cvx

import numpy as np

def proj_simplex_cvxpy(a, y):

'''

Returns the point in the simplex a^Tx = 1, x>=0 that is

closest to y (according to Euclidian distance)

'''

d = len(a)

# setup the objective and constraints and solve the problem

x = cvx.Variable(d)

obj = cvx.Minimize(cvx.sum_squares(x - y))

constr = [x >= 0, a*x == 1]

prob = cvx.Problem(obj, constr)

prob.solve()

return np.array(x.value).squeeze()

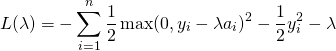

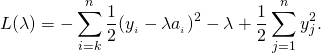

We can write the dual as:

![Rendered by QuickLaTeX.com \begin{align*} L(\lambda) & = \min_{x} \sum_{i=1}^{n}\left[\frac{1}{2}(x_i - y_i)^2 + \lambda a_i x_i \right] -\lambda\qquad \textrm{s.t.} \quad x \succeq 0, \\ & =\min_{x} \sum_{i=1}^{n}\left[\frac{1}{2}x_i^2 - x_i[y_i - \lambda a_i]- \frac{1}{2}y_i^2\right] - \lambda \qquad \textrm{s.t.} \quad x \succeq 0. \end{align*}](http://www.mcduplessis.com/wp-content/ql-cache/quicklatex.com-ec69243cdbdcd49401aaa472a9e2eaa1_l3.png)

Since the ![]() decouples (for fixed

decouples (for fixed ![]() ) it can be solved as:

) it can be solved as:

![]()

The dual objective (which is to be maximized) is therefore:

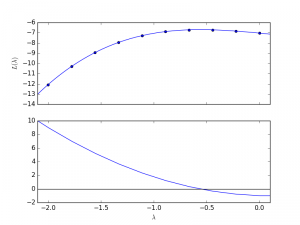

An example of the dual function is given below (source code is here):

As ![]() is decreased from a large value, the values of

is decreased from a large value, the values of ![]() will “switch on” from

will “switch on” from ![]() . To determine where the variables will switch on we sort the values according to

. To determine where the variables will switch on we sort the values according to ![]() .

.

Consider that we have an index ![]() that sorts according to

that sorts according to ![]() . In other words:

. In other words:

![]()

We can refer to these as our indexed ![]() values,

values,

![]()

Suppose that we have a ![]() value. We then want to find an index

value. We then want to find an index ![]() , so that

, so that

![]()

We can then write the objective function as:

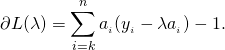

The derivative of the above is therefore:

By searching on the sorted list, we get an index ![]() , such that

, such that ![]() , but

, but ![]() .

.

The optimal lambda value can then be calculated as:

![]()

The final algorithm is:

def proj(a, y):

l = y/a

idx = np.argsort(l)

d = len(l)

evalpL = lambda k: np.sum(a[idx[k:]]*(y[idx[k:]] - l[idx[k]]*a[idx[k:]]) ) -1

def bisectsearch():

idxL, idxH = 0, d-1

L = evalpL(idxL)

H = evalpL(idxH)

if L<0:

return idxL

while (idxH-idxL)>1:

iMid = int((idxL+idxH)/2)

M = evalpL(iMid)

if M>0:

idxL, L = iMid, M

else:

idxH, H = iMid, M

return idxH

k = bisectsearch()

lam = (np.sum(a[idx[k:]]*y[idx[k:]])-1)/np.sum(a[idx[k:]])

x = np.maximum(0, y-lam*a)

return x

Other resources:

- MATLAB implementation of projection onto unit simplex (description here)

- Michelot, C. (1986). A finite algorithm for finding the projection of a point onto the canonical simplex of∝ n. Journal of Optimization Theory and Applications, 50(1), 195-200.

Todo:

- Implement the code in C/C++

- Give a better test case

- Simplify the explanation and implementation

- Give a practical example (e.g. estimation problem with subgradient descent)